I am a second-year Ph.D. student in the School of Computer Science, Fudan University, co-supervised and sponsored by Shanghai AI Laboratory.

My research interests include:

- natural language processing;

- efficient machine learning and downstream adaptation; and

- alignment of large language models in the healthcare/clinical domain.

I am fortunate to be advised by Prof. Yu Wang and Prof. Ya Zhang.

🎓 Education

- 2023.06 - Current, Fudan University, School of Computer Science, Shanghai, Ph.D.

- 2019.09 - 2023.06, Shanghai Jiao Tong University, School of Electronic Information and Electrical Engineering, Shanghai, Bachelor's Degree

📝 Publications

Highlight

Jiang, S., Liao, Y., Zhang, Y., Wang, Y., & Wang, Y. (2025). Fine-tuning with Reserved Majority for Noise Reduction. In The Thirteenth International Conference on Learning Representations. [Link]

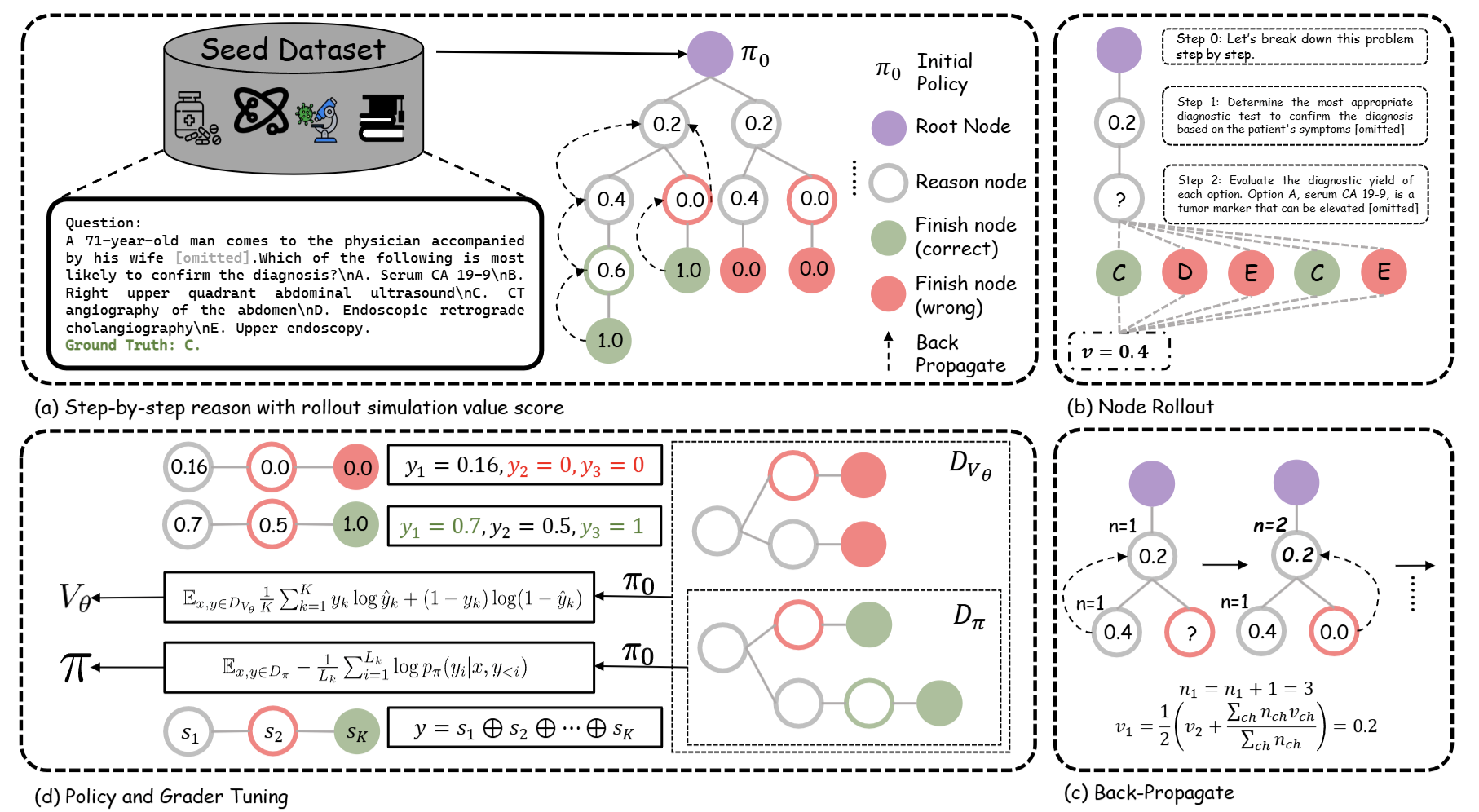

Jiang S, Liao Y, Chen Z, et al. MedS$^3$: Towards Medical Slow Thinking with Self-Evolved Soft Dual-sided Process Supervision[C]//AAAI 2026.

[Link]

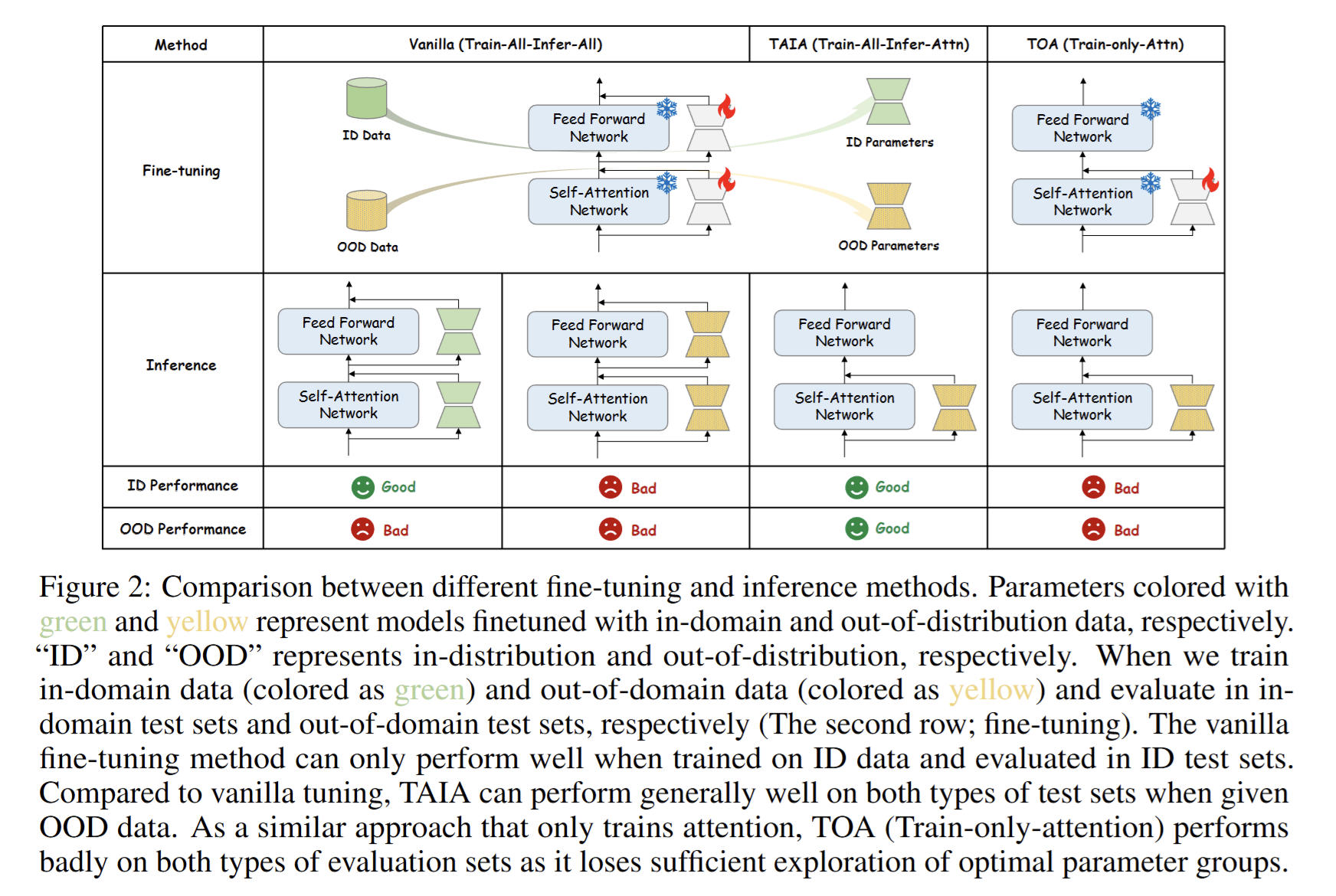

Jiang, S.*, Liao, Y.*, Zhang, Y., Wang, Y., & Wang, Y. (2024). TAIA: Large Language Models are Out-of-Distribution Data Learners. In Advances in Neural Information Processing Systems (pp. 105200–105235). Curran Associates, Inc. [Link]

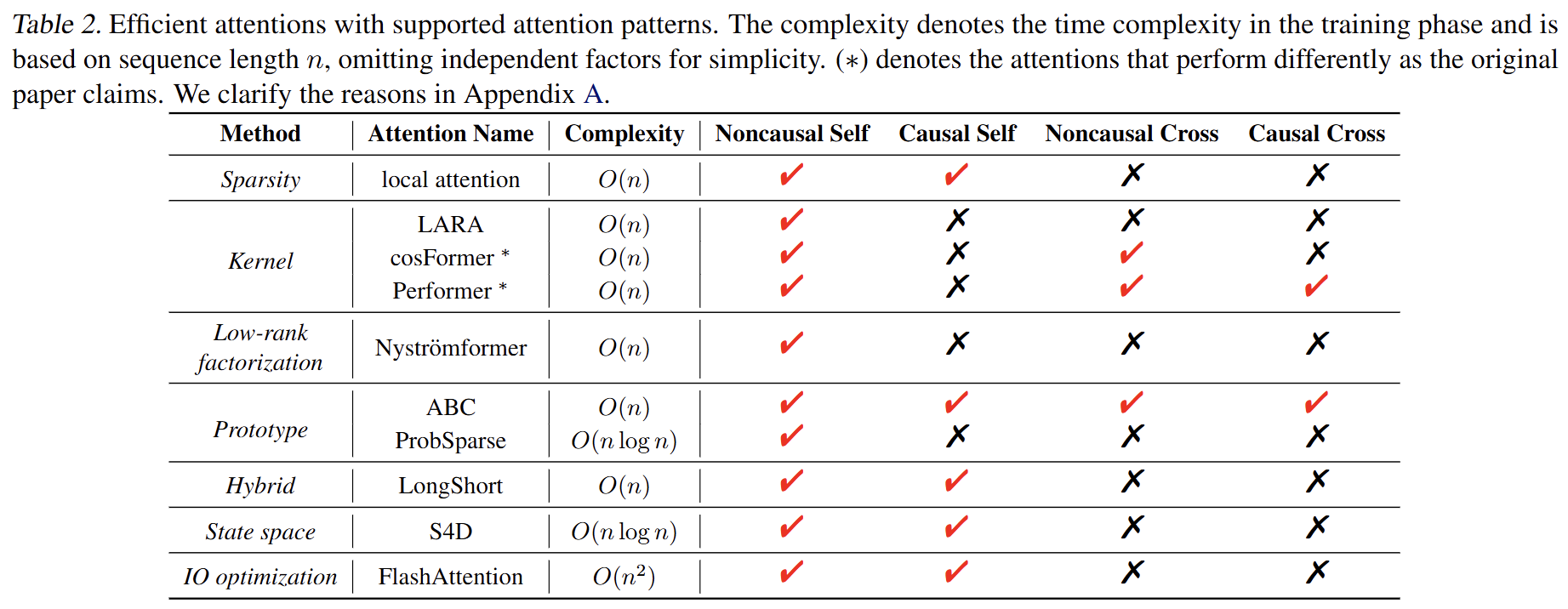

Zhang J.*, Jiang S.*, Feng J., et al. CAB: Comprehensive Attention Benchmarking on Long Sequence Modeling[C]//International Conference on Machine Learning. PMLR, 2023: 41194-41218.

[Link]

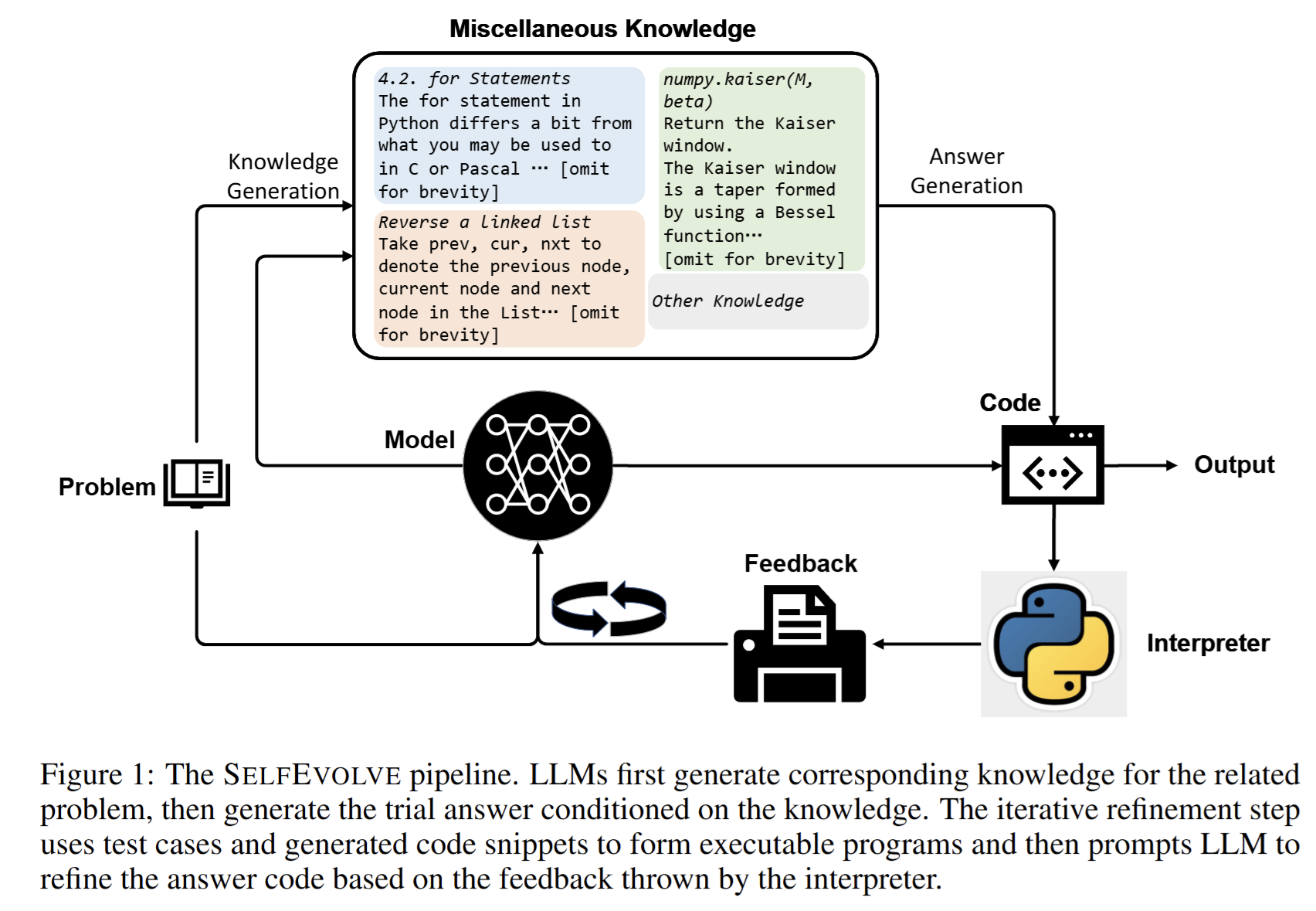

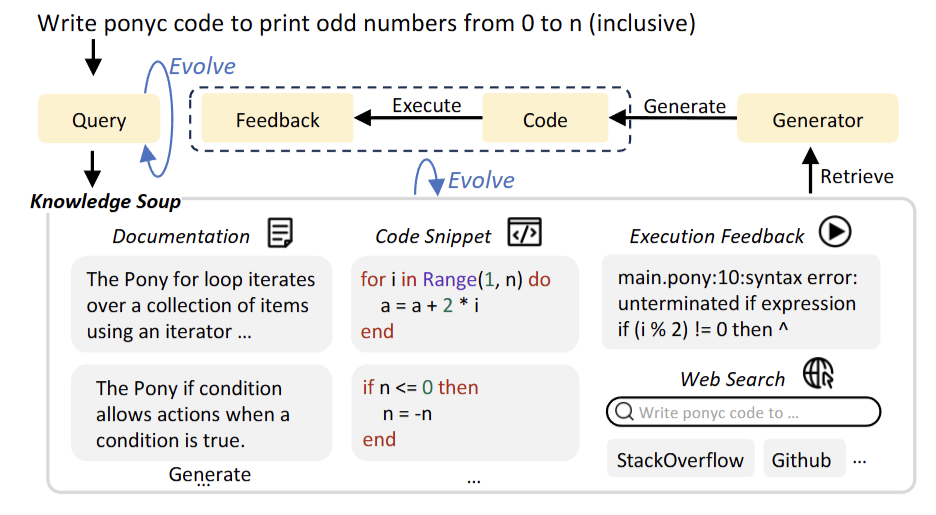

Jiang S., Wang Y., & Wang Y. SelfEvolve: A Code Evolution Framework via Large Language Models[J]. arXiv preprint arXiv:2306.02907, 2023.

[Link]

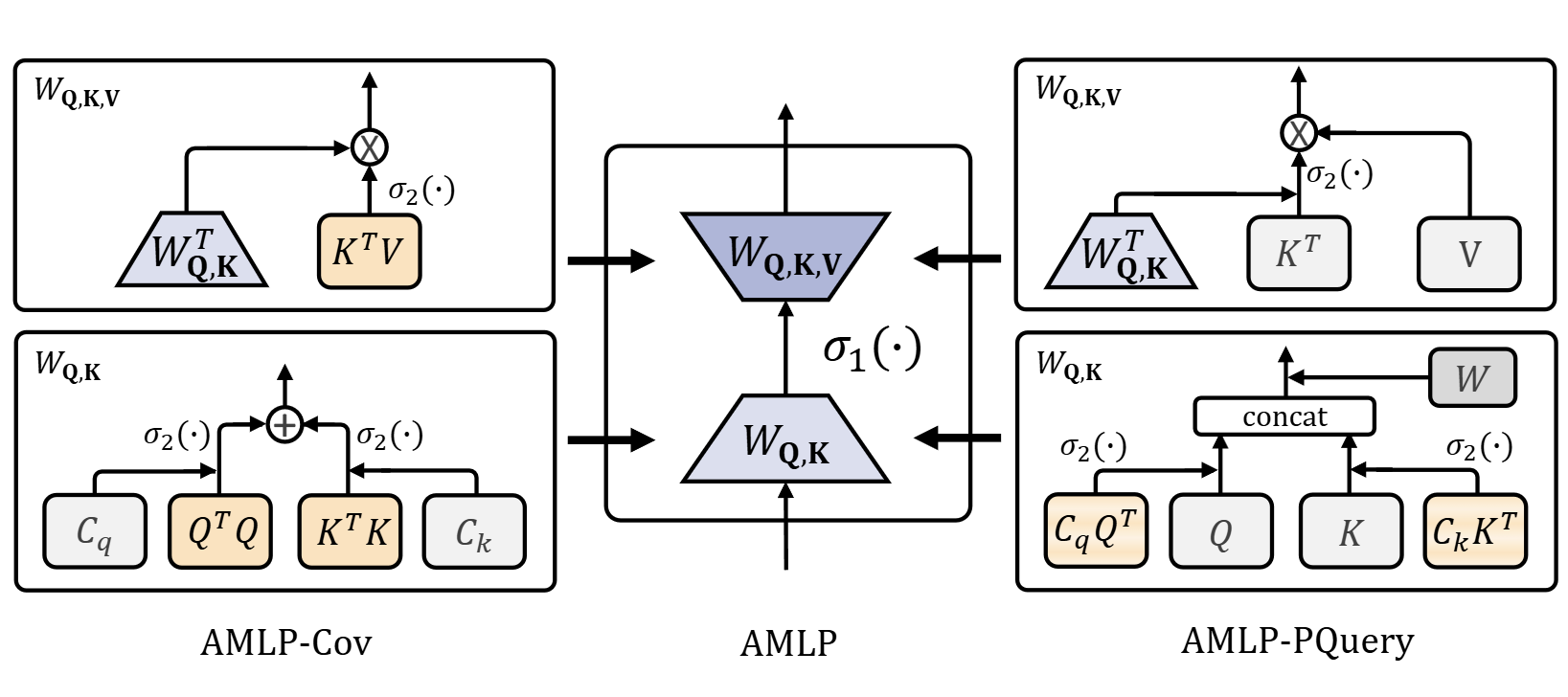

Jiang S., Zhang J., Feng J., et al. Attentive Multi-Layer Perceptron for Non-autoregressive Generation[C]//Joint European Conference on Machine Learning and Knowledge Discovery in Databases. Cham: Springer Nature Switzerland, 2023: 612-629.

[Link]

Others

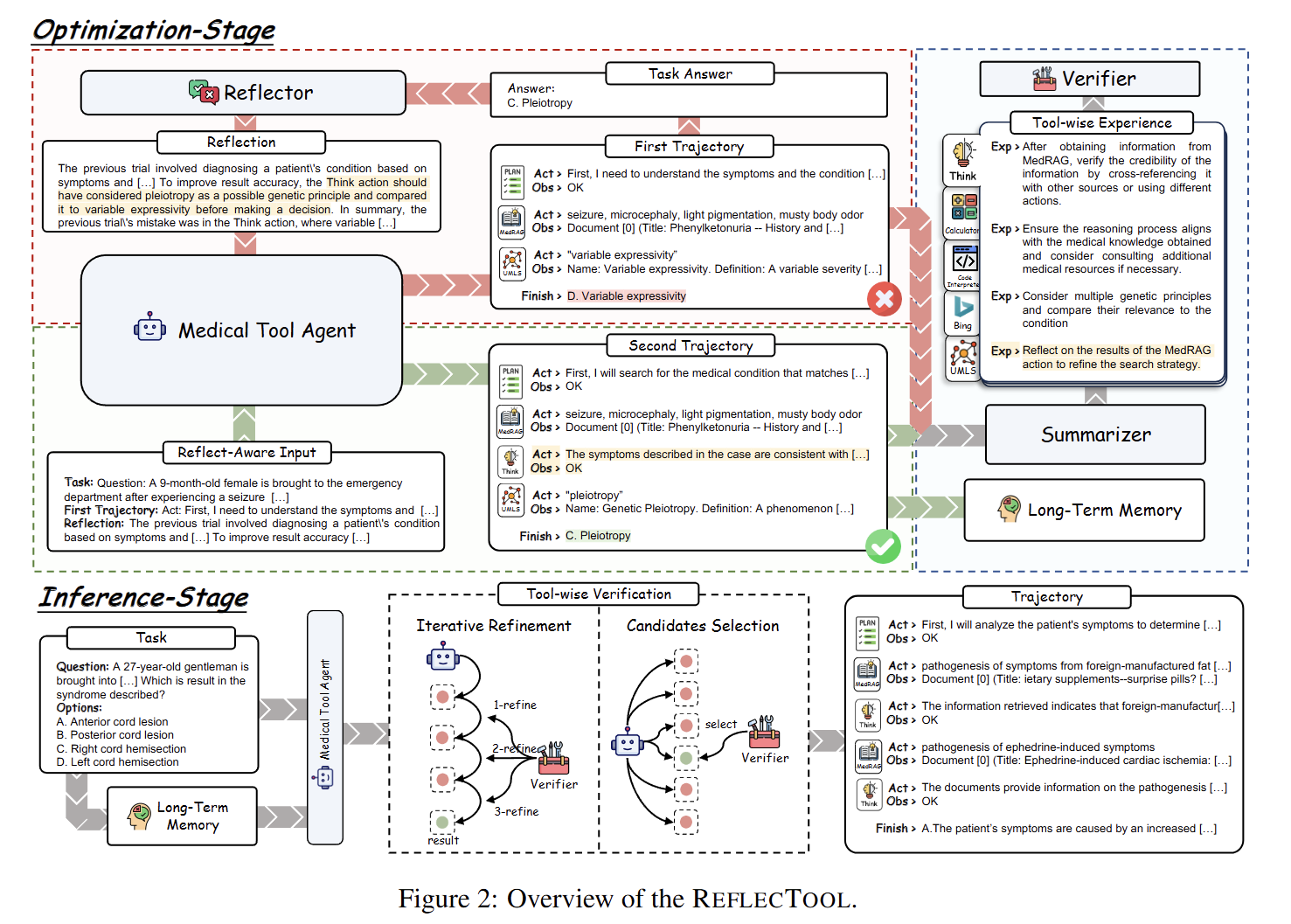

- Liao Y., Jiang S., Wang Y., et al. ReflecTool: Towards Reflection-Aware Tool-Augmented Clinical Agents. In Proceedings of the 63rd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pages 13507–13531, Vienna, Austria. Association for Computational Linguistics.

[Link]

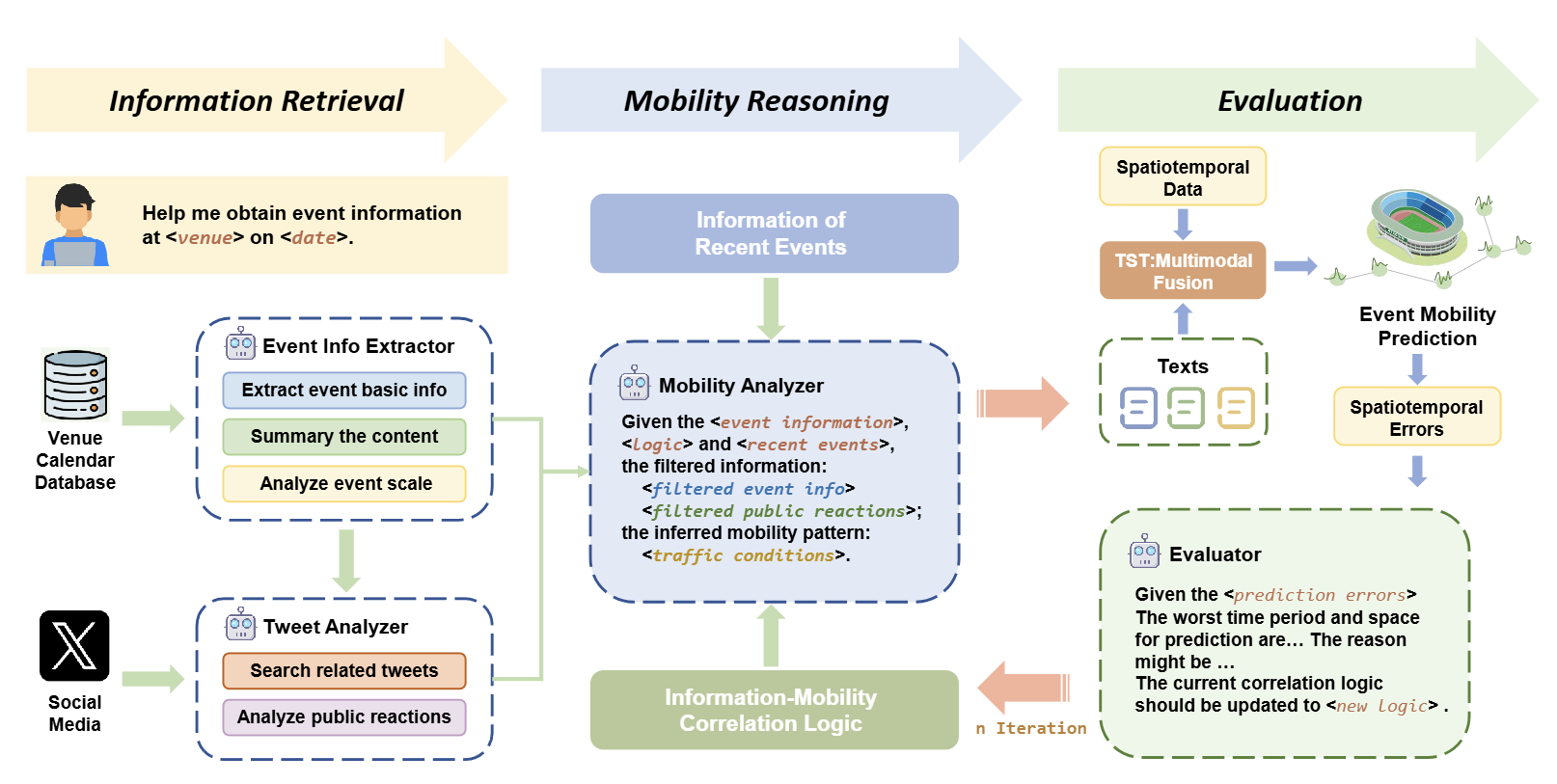

- Chen, R., Jiang, S., & Huang, W. (2025). SeMob: Semantic Synthesis for Dynamic Urban Mobility Prediction. In Proceedings of the 2025 Conference on Empirical Methods in Natural Language Processing, pages 15346–15366, Suzhou, China. Association for Computational Linguistics.

[Link]

- Liao Y*,

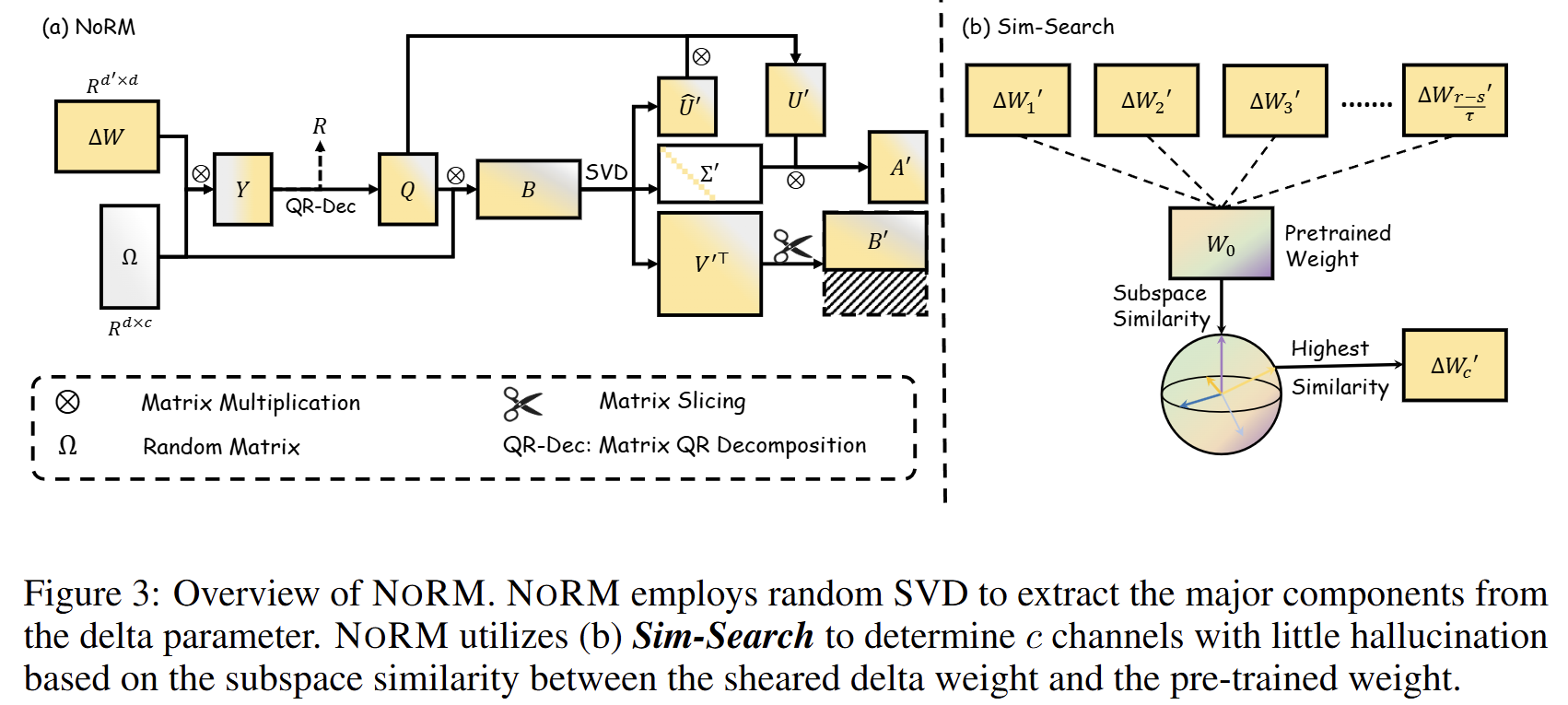

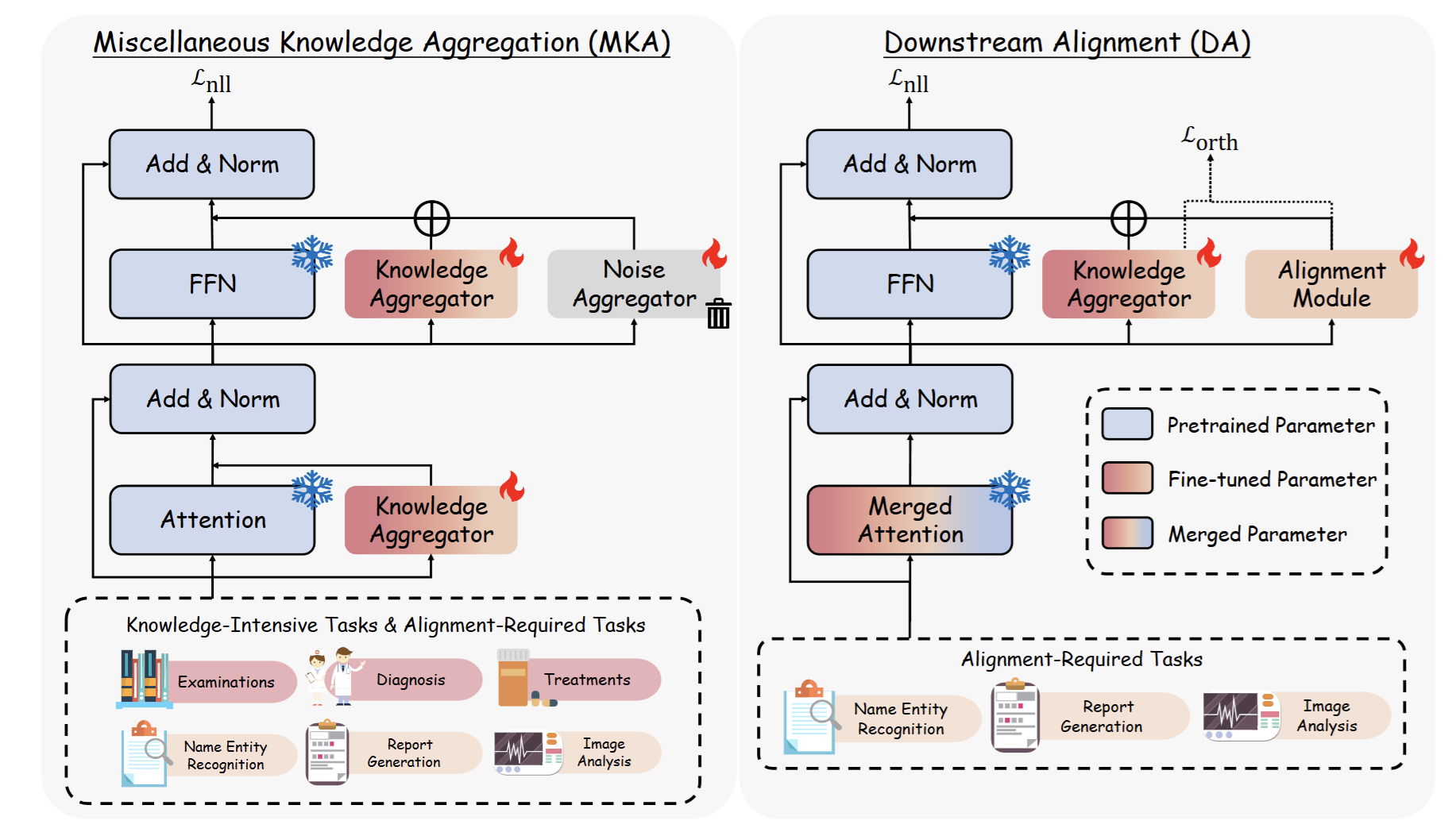

Jiang S*, Chen Z, et al. MedCare: Advancing medical LLMs through decoupling clinical alignment and knowledge aggregation[C]//Findings of the Association for Computational Linguistics: EMNLP 2024. 2024: 2538-2554.

[Link]

- Su H, Jiang S, Lai Y, et al. EvoR: Evolving Retrieval for Code Generation[C]//Findings of the Association for Computational Linguistics: EMNLP 2024. 2024: 2538-2554.

[Link]

💻 Internships

- 2022.01 - 2023.04, Shanghai Artificial Intelligence Laboratory, Intern Researcher